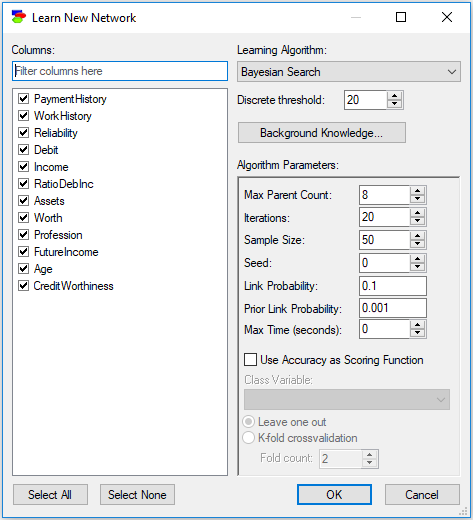

The Bayesian Search structure learning algorithm is one of the earliest and the most popular algorithms used. It was introduced by (Cooper & Herkovitz, 1992) and was refined somewhat by (Heckerman, 1995). It follows essentially a hill climbing procedure (guided by a scoring heuristic, which in GeNIe is the log-likelihood function) with random restarts. Here is the Learn New Network dialog for the Bayesian Search algorithm:

The Bayesian Search algorithm has the following parameters:

•Max Parent Count (default 8) limits the number of parents that a node can have. Because the size of conditional probability tables of a node grow exponentially in the number of the node's parents, it is a good idea to put a limit on the number of parents so that the construction of the network does not exhaust all available computer memory.

•Iterations (default 20) sets the number of restarts of the algorithm. Generally, the algorithm is searching through a hyper-exponential search space and its goal can be compared to searching for a needle in a haystack. Restarts allow for probing more areas of the search space and increase the chance of finding a structure that will fit the data better. We advise to make this number as large as we can afford it in terms of running time. The default number of iterations should give you an idea of how long the algorithm will take when the number of iteration is larger. The computing time is roughly linear in the number of iterations.

•Sample size (default 50) takes part in the score (BDeu) calculation, representing the inertia of the current parameters when introducing new data.

•Seed (default 0), which is the initial random number seed used in the random number generator. If you want the learning to be reproducible (i.e., you want to obtain the same result every time you run the algorithm), use the same Seed. Seed equal to zero (the default) makes the random number generator really random by starting it with the current value of the processor clock, so please try a different value for reproducibility of results.

•Link Probability (default 0.1) is a parameter used when generating a random starting network at the outset of each of the iterations. It essentially influences the connectivity of the starting network.

•Prior Link Probability (default 0.001) influences the (BDeu) score, by offering a prior over all edges. It comes into the formula in the following way: log Posterior score = log marginal likelihood (i.e., the BDeu) + |parents|*log(pll) + (|nodes|-|parents|-1)*log(1-pll)).

•Max Time (seconds) (default 0, which means no time limit) sets a limit on the run time of the algorithm. It is a good idea to set a limit for any sizable data set so as to have the algorithm terminates within a reasonable amount of time.

•Use Accuracy as Scoring Function (default off). When checked, the algorithm will use the classification accuracy as the scoring function in search for the optimal graph. The user has to specify the class variable and the cross-validation technique (Leave one out or K-fold cross-validation, the former being a special case of the latter, when the Fold count is equal to the number of records in the data set). When this option is used, GeNIe uses classification accuracy only to compare the networks generated by (independent) iterations of BS. The actual inner work of a single BS iteration is not influenced by this option.

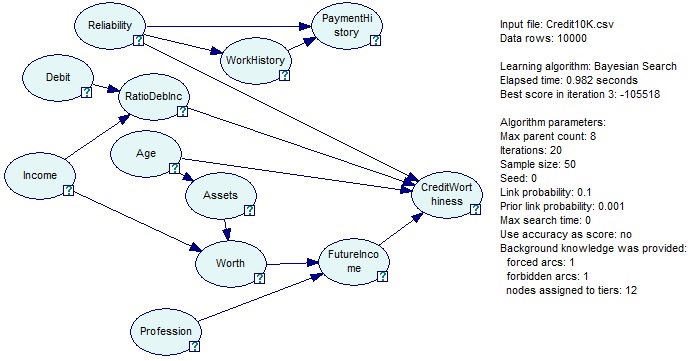

The algorithm produces an acyclic directed graph that achieves the highest score. The score is proportional to the probability of the data given the structure, which, assuming that we assign the same prior probability to any structure, is proportional to the probability of the structure given the data. The algorithm produces an on-screen text box that includes the settings of all parameters of the BS algorithms.

Because the Bayesian Search algorithm produces an acyclic directed graph, it is a good idea to investigate the theoretical limits to what it can identify based on the data. To this effect, we advise the user to transform the acyclic directed graph of a Bayesian network to the Pattern Editor dialog.